AI ethics: moving from aspirations to specifics

An Atlantic Council paper provides a clear and practical framework for leaders to move beyond platitudes into the hard ethical challenges AI is creating

As Artificial Intelligence (AI) systems become commonplace in business and society, they are engendering complex debates concerning the ethical policies and rules that should govern their design, deployment, and power. Many big tech companies such as Google and Microsoft have articulated general principles that should guide their AI teams, but these efforts have, to date, mainly provided aspirational guidance. Moreover, even companies as rich and talented as Google have struggled when moving from general statements of intent—e.g., AI systems should not discriminate on the basis of race—to specific rules and development strategies.

Because the newness and complexity of AI systems pose serious hurdles to technologists and ethicists, some regulators have decided they must move forward and create regulatory answers to AI's tough questions. In places as diverse as the European Union and China, the past few years have seen proposals for new AI regulations that, in the case of the EU at least, would significantly constrain what AI developers and their customers can do with these new technologies. The pressing challenge for both private and public stakeholders in the AI ecosystem is to move quickly past today's general statements of intent to specific rules and guidelines that are consistent with social norms, goals, and established legal principles.

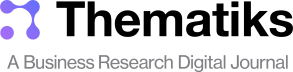

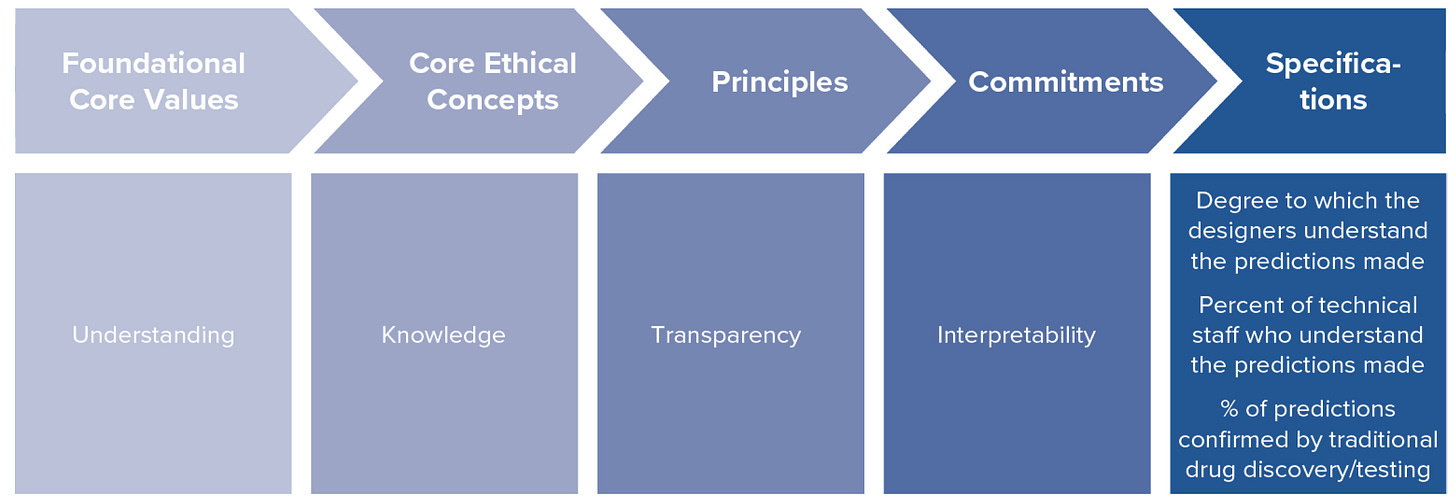

Seeking to support this effort is a proposal from the Atlantic Council's Geotech Center written by John Basl (Northeastern), Ronald Sandler (Northeastern), and Steven Tiell (Accenture). Their paper gives organizations working with AI systems a framework for developing high-level statements of intent into specifics. As shown in Figure 1 below, their approach is built on the concept of normative content. As the authors note, norms come in two types. Descriptive norms describe "what is." Prescriptive norms describe "what should be." Ethical norms are one category of prescriptive norm, and they are the foundation of the authors' model. In the context of this paper, ethical norms should serve as the foundation of "well-justified standards, principles, and practices for what individuals, groups, and organizations should do, rather than merely describing what they currently do."

Figure 1: Framework for cascading ethical norms into specific attributes of AI systems (Source: Authors)

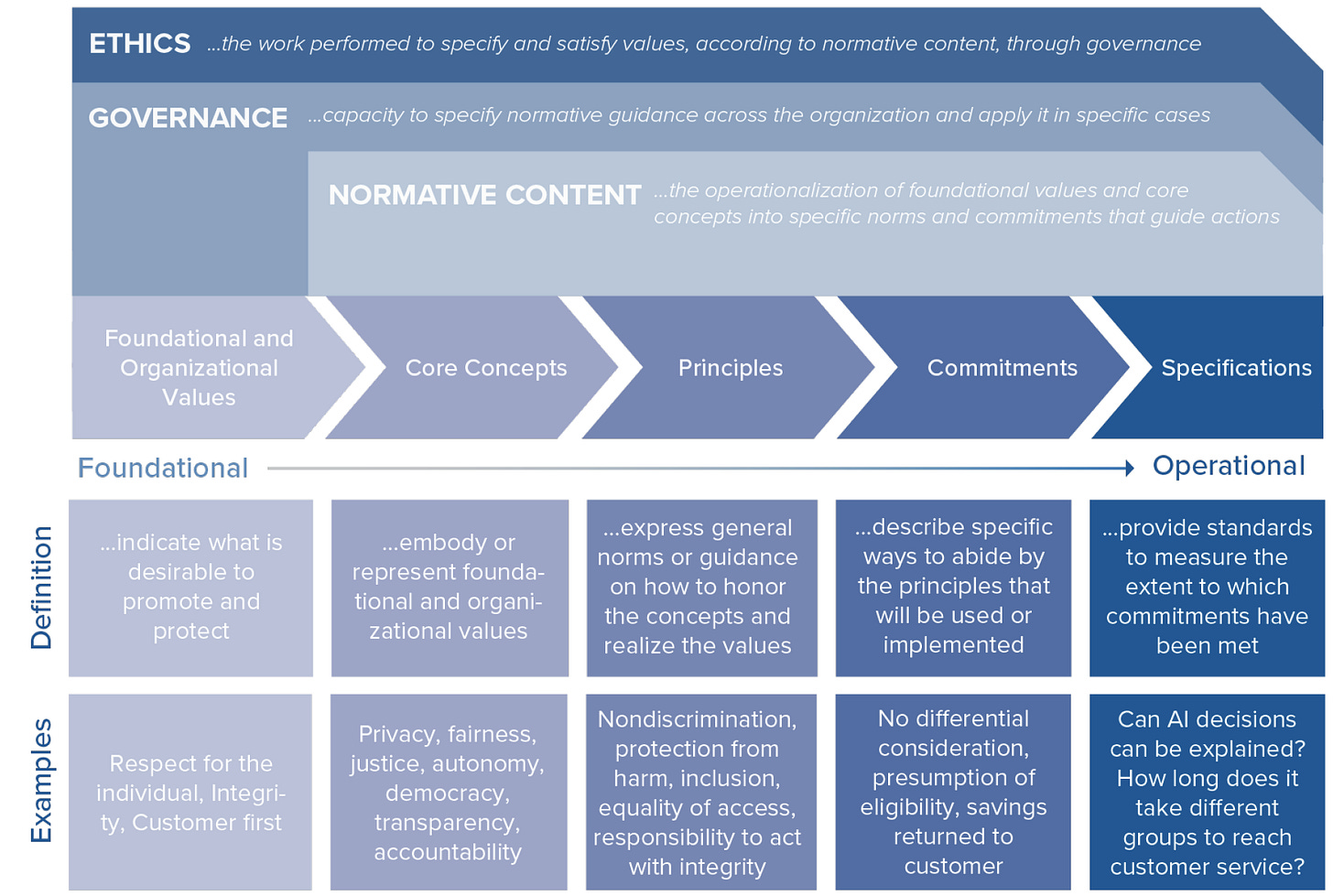

The authors illustrate their approach with an example from healthcare, specifically the idea that any patient who participates in a medical trial should have given informed consent. For the authors, informed consent, as a specific practice, flows down from a higher-order norm they identify as respect for the individual patient. In other words, informed consent exists because research institutions are committed to enabling and respecting each patient's right to be the ultimate arbiter of their own medical care. As shown in Figure 2 below, the high-order norm of respect for the individual leads to accepting the ethical concept of autonomy, which then leads to the commitment to require informed consent for any clinical trial.

Figure 2: Example: Ethical framework for respecting autonomy in medical research (Source: Authors)
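The cascade in the informed-consent example can be pictured as a small tree in which each level is justified by the one above it. The sketch below is only an illustration: the class name `NormativeLayer`, its fields, and the level labels are assumptions made for this example and do not come from the paper.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NormativeLayer:
    """One level in a cascade from a foundational value to a concrete practice.

    Hypothetical structure for illustration; not taken from the paper.
    """
    level: str       # e.g. "foundational value", "ethical concept", "practice"
    statement: str   # what this level commits the organization to
    children: list[NormativeLayer] = field(default_factory=list)

    def add(self, level: str, statement: str) -> NormativeLayer:
        """Attach a more specific layer beneath this one and return it."""
        child = NormativeLayer(level, statement)
        self.children.append(child)
        return child

    def trace(self, depth: int = 0) -> list[str]:
        """Return the cascade as indented lines, most general level first."""
        lines = ["  " * depth + f"{self.level}: {self.statement}"]
        for child in self.children:
            lines.extend(child.trace(depth + 1))
        return lines


# The healthcare example from the text, encoded level by level.
root = NormativeLayer("foundational value", "respect for the individual patient")
concept = root.add("ethical concept", "autonomy")
concept.add("practice", "informed consent for any clinical trial")

print("\n".join(root.trace()))
```

Keeping the parent of every practice explicit makes it possible to ask, for any rule, which value it is meant to protect.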

For the authors, informed consent in bioethics has several lessons for moving from general ethical concepts to practical guidance in AI and data ethics:

To move from a general ethical concept (e.g., justice, explanation, privacy) to practical guidance, it is first necessary to specify the normative content (i.e., to specify the general principle and provide context-specific operationalization of it).

Specifying the normative content often involves clarifying the foundational values it is meant to protect or promote.

What a general principle requires in practice can differ significantly by context.

It will often take collaborative expertise—technical, ethical, and context-specific—to operationalize a general ethical concept or principle.

Because novel, unexpected, contextual, and confounding considerations often arise, there need to be ongoing efforts, supported by organizational structures and practices, to monitor, assess, and improve operationalization of the principles and implementation in practice. It is not possible to operationalize them once and then move on.

With these lessons in mind, the authors look at two ethical norms common to AI policies today and apply their framework to each.

Issue 1: Justice

AI ethics frameworks generally subscribe to the idea that these new technologies should, at the very least, not make the world more unjust. Ideally, the adoption of AI should make the world more just in some way. But what, the authors ask, does justice mean in the world of AI? The complexity of the concept suggests to them that "to determine what justice in AI and data use requires in a particular context—for example, in deciding on loan applications, social service access, or healthcare prioritization—it is necessary to clarify the normative content and underlying values." For the authors, justice as an abstract idea seems to be of little value. It is only when the norm of justice is placed in a specific context that it is "possible to specify what is required in specific cases, and in turn how (or to what extent) justice can be operationalized in technical and techno-social systems."

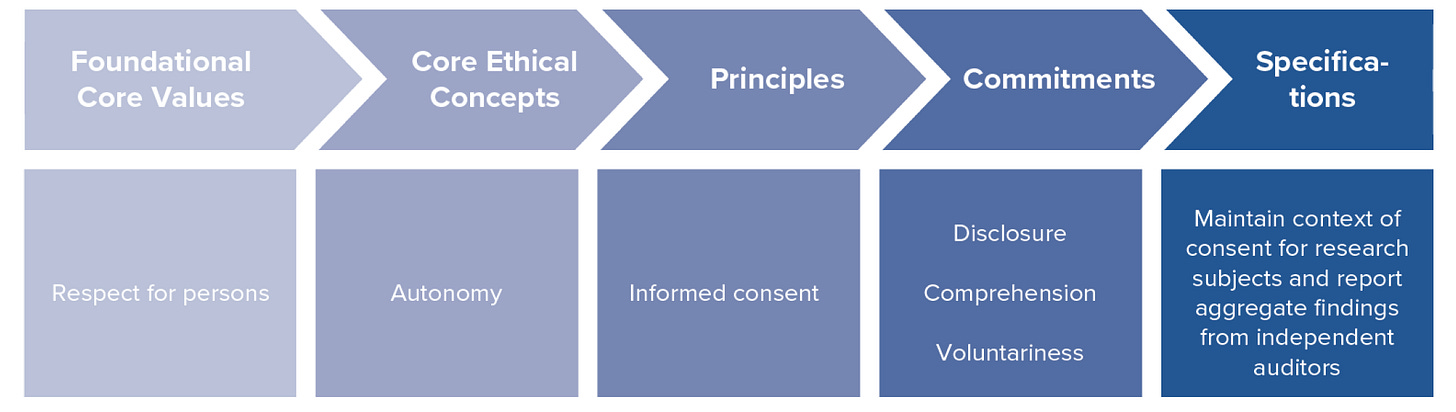

As shown in Figure 3 below, the authors explain their approach by looking at a hypothetical AI application in the financial services industry.

Figure 3: Example: Ethical framework for algorithmic lending (Source: Authors)

In this case, the firm—whether for internal reasons or regulatory requirements—commits to nondiscrimination, equal treatment, and equal access in deploying, for example, an AI-based mortgage approval algorithm. To ensure that its high-level norm is met, the firm also adopts a series of commitments and system specifications designed to align with the overall norm and the specific challenge of justly approving the right mortgage applicants. In discussing their hypothetical model, the authors note that the specifics presented may not be exhaustive. For example, "if there has been a prior history of unfair or discriminatory practices toward particular groups, then reparative justice might be a relevant justice-oriented principle as well." Or if a firm has a social mission to promote equality or social mobility, then "benefiting the worst-off might also be a relevant justice-oriented principle."

For all cases, however, the authors highlight two key points:

First, there is no singular, general justice-oriented constraint, optimization, or utility function; nor is there a set of hierarchically ordered ones. Instead, context-specific expertise and ethical sensitivity are crucial to determining what justice requires. A system that is designed and implemented in a justice-sensitive way for one context may not be fully justice-sensitive in another context, since the aspects of justice that are most salient can be different.

Second, there will often not be a strictly algorithmic way to fully incorporate justice into decision-making, even once the relevant justice considerations have been identified. For example, there can be data constraints, such as the necessary data might not be available (and it might not be the sort of data that could be feasibly or ethically collected). There can be technical constraints, such as the relevant types of justice considerations not being mathematically (or statistically) representable. There can be procedural constraints, such as justice-oriented considerations that require people to be responsible for decisions.
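With those two caveats in mind, a single narrow specification can still be checked mechanically. The sketch below compares approval rates across applicant groups and flags large gaps for human review. It is an assumption-laden illustration, not the authors' method: the function names, the toy data, and the `TOLERANCE` threshold are all hypothetical, and an approval-rate gap is only one of many possible justice-related measures.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in approval rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: (group label, was the mortgage approved?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", True),
]

TOLERANCE = 0.10  # hypothetical threshold a firm might choose for its context

rates = approval_rates(sample)
gap = max_rate_gap(rates)
needs_review = gap > TOLERANCE  # a flag for people to investigate, not a verdict

print(rates, gap, needs_review)
```

The check deliberately ends in a flag rather than an automatic decision, which fits the point that some justice-oriented considerations require people to be responsible for decisions.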

Issue 2: Transparency

A significant concern for AI regulators is transparency, i.e., the ability for the creators and users of AI to understand how the systems operate and reach decisions. As with justice, however, transparency is a term that can signify different things to different people. Thus, translating calls for transparency into guidance for the design and use of algorithmic decision-making systems "requires clarifying why transparency is important in that context, for whom there is an obligation to be transparent, and what forms transparency might take to meet those obligations."

The authors discuss several principles that could be used to translate the high-level idea of transparency into commitments and specifications. These include "explainability," i.e., an AI maker commits to not making a system whose inner workings cannot be explained to outsiders. Another principle might be "auditability," i.e., creating the ability for an internal or external review of an AI's operating and decision history. A third and critical issue is "interpretability," the commitment not to create AI whose capabilities are beyond the understanding of even its creators. This phenomenon is not a theoretical problem; it is already here, as a 2017 MIT Technology Review article about an AI-controlled autonomous car reported:

Getting a car to drive this way was an impressive feat. But it’s also a bit unsettling, since it isn’t completely clear how the car makes its decisions. Information from the vehicle’s sensors goes straight into a huge network of artificial neurons that process the data and then deliver the commands required to operate the steering wheel, the brakes, and other systems. The result seems to match the responses you’d expect from a human driver. But what if one day it did something unexpected—crashed into a tree, or sat at a green light? As things stand now, it might be difficult to find out why. The system is so complicated that even the engineers who designed it may struggle to isolate the reason for any single action. And you can’t ask it: there is no obvious way to design such a system so that it could always explain why it did what it did.

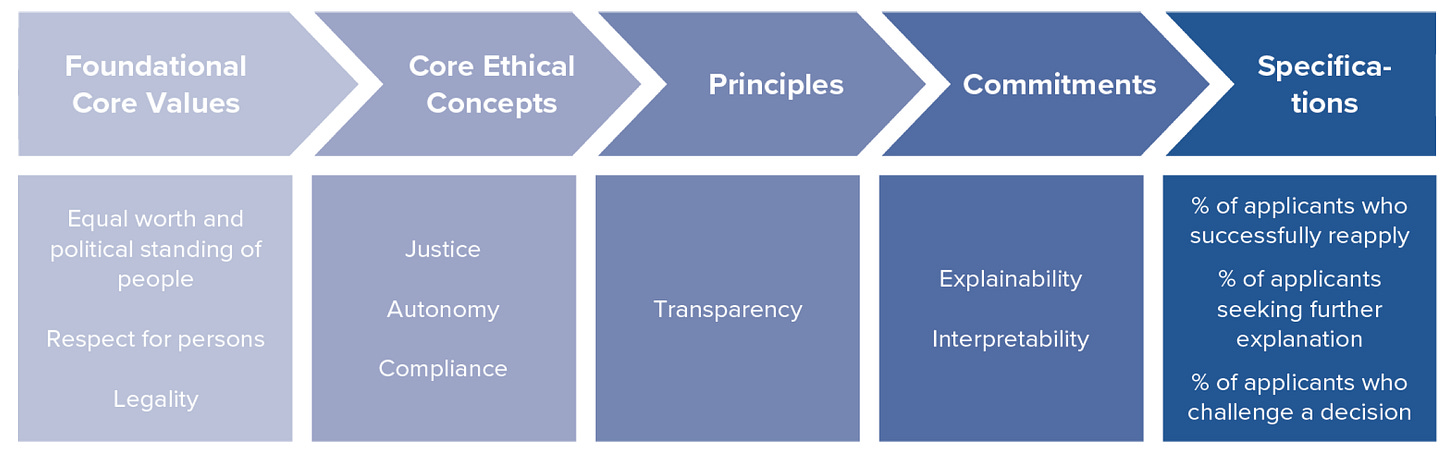

As an example of how to address issues such as those noted above, Figure 4 below illustrates how the authors’ framework cascades a first-order value—understanding—through a drug discovery AI system so that its final specifications align with and support the original goal.

Figure 4: Example: Ethical framework for a drug discovery algorithm (Source: Authors)

A similar approach, the authors suggest, can be used to expand notions of auditability and explainability from value statements into specific system attributes at the operational level.
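As one illustration of what an operational attribute for auditability might look like, the sketch below records every decision a system makes so that an internal or external reviewer can later inspect its decision history. The names `DecisionRecord` and `AuditLog`, the recorded fields, and the sample inputs are assumptions for this example; the paper does not prescribe a particular design.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """A single logged decision (hypothetical field set)."""
    model_version: str
    inputs: dict
    output: str
    timestamp: str  # ISO 8601, UTC


class AuditLog:
    """Append-only, in-memory log of decisions for later review."""

    def __init__(self):
        self._records = []

    def record(self, model_version, inputs, output):
        """Store one decision together with the time it was made."""
        entry = DecisionRecord(
            model_version=model_version,
            inputs=dict(inputs),  # copy so later changes do not alter the log
            output=output,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self._records.append(entry)
        return entry

    def export(self):
        """Serialize the full history as JSON for a reviewer."""
        return json.dumps([asdict(r) for r in self._records], indent=2)


log = AuditLog()
log.record("v1", {"income": 50000, "loan_amount": 200000}, "approved")
print(log.export())
```

A production system would also need tamper resistance, retention rules, and privacy safeguards for the logged inputs, none of which this sketch attempts.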

Conclusions

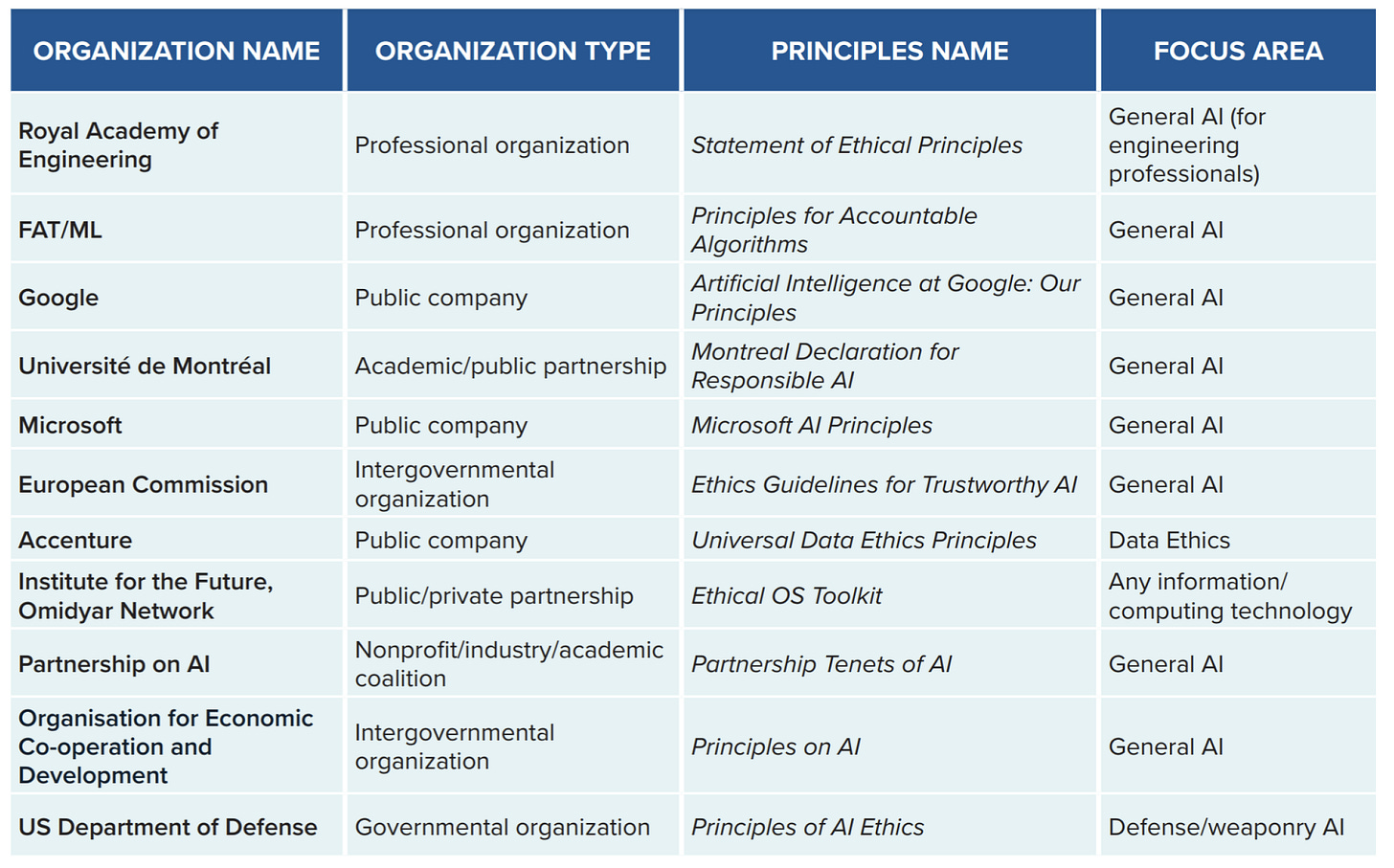

As shown in Figure 5 below, institutional commitments to high-level AI principles are becoming commonplace. However, these right-sounding statements all too often lack specifics about the policies and technical decisions that must be adopted in order to make the high-level goals a reality. The authors conclude their paper by noting that "if these concepts and principles are to have an impact—if they are to be more than aspirations and platitudes—then organizations must move from loose talk of values and commitments to clarifying how normative concepts embody foundational values, what principles embody those concepts, what specific commitments to those principles are for a particular use case, and ultimately to some specification of how they will evaluate whether they have lived up to their commitments."

Figure 5: Examples of AI principles, codes, and value statements (Source: Authors)

It is common to hear organizations claim to favor justice and transparency, the authors note, but "the hard work is clarifying what a commitment to them means and then realizing them in practice." This paper provides a solid point from which to start that hard work. Some issues deserve attention in future work from this team. For example, the authors repeatedly stress the importance of "context," but making ethics contextual opens up the risk that AI ethics will slide down the slippery slope of moral relativism. It would be useful to understand where and how to draw the line when granting contextual exceptions to global AI policies. This challenge is especially acute for technologies such as facial recognition, which are widely adopted in countries such as China but are the subject of intense criticism in the West. In addition, the authors did not include symmetrical identification in their discussion of transparency. The idea that AI should make itself known to those who interact with it is embedded in the new EU AI regulations, so it was surprising not to see the topic included.

Another issue the authors do not discuss relates to the ethical implications of AI's inevitable unintended consequences. As with all great technological shifts, AI will generate effects that no one expected. The recent case of Airbnb’s price optimization algorithm illustrates the point. The tool worked as intended; however, because Black hosts were 41% less likely than White hosts to adopt the algorithm, it increased the racial revenue gap at the macro level. In other words, even an AI that did what it was supposed to do ended up making the overall platform less just. It would be useful to know whether the authors think AI ethical systems should define the responsibility for such outcomes beforehand and, if so, how.
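The mechanism behind this outcome is simple arithmetic: a tool that helps every adopter equally still widens a gap between groups if one group adopts it less often. The sketch below works through a toy version. Only the 41% figure comes from the text above; the baseline revenue, the uplift, and the White-host adoption rate are hypothetical values chosen for illustration, and reading "41% less likely" as a 41% lower adoption rate is an assumption.

```python
def average_revenue(baseline, uplift, adoption_rate):
    """Group-average revenue when only adopters receive the uplift."""
    return baseline * (1 + uplift * adoption_rate)


BASELINE = 100.0       # hypothetical: identical starting revenue for both groups
UPLIFT = 0.10          # hypothetical: adopters earn 10% more
WHITE_ADOPTION = 0.20  # hypothetical adoption rate among White hosts
# Assumed reading of "41% less likely": the adoption rate is 41% lower.
BLACK_ADOPTION = WHITE_ADOPTION * (1 - 0.41)

gap_before = BASELINE - BASELINE  # no gap by construction in this toy setup
gap_after = (
    average_revenue(BASELINE, UPLIFT, WHITE_ADOPTION)
    - average_revenue(BASELINE, UPLIFT, BLACK_ADOPTION)
)

print(f"gap before: {gap_before:.2f}, gap after: {gap_after:.2f}")
```

In this toy setup the algorithm treats every adopter identically, yet a revenue gap appears purely because of the difference in adoption.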

As noted above, this paper does not address some critical issues in the current AI ethics debate. However, those omissions are likely a result of the project's limited scope, which was to provide a framework for development and not the answers themselves. As such, the paper succeeds in its aim. It provides a framework that both executives and technologists can use to have serious discussions about the ethical implications of their AI creations. As the authors note, "the challenge of translating general commitments to substantive action is fundamentally a techno-social one that requires multi-level system solutions incorporating diverse groups and expertise." This brief but thoughtful analysis is a solid starting point for business and social leaders wishing to take on this challenge.

The Research

John Basl, Ronald Sandler, and Steven Tiell. Getting from Commitment to Content in AI and Data Ethics: Justice and Explainability. Atlantic Council report, August 2021. Available at https://www.atlanticcouncil.org/in-depth-research-reports/report/specifying-normative-content/